The origin of the boosting method for designing learning machines is traced back to the work of Valiant and Kearns, who posed the question of whether a weak learning algorithm, meaning one that does slightly better than random guessing, can be boosted into a strong one with a good performance index.

- At the heart of such techniques lies the base learner, which is a weak one.

Boosting consists of an iterative scheme, where

- at each step, the base learner is optimally computed using a different training set;

- the set at the current iteration is generated either:

  - according to an iteratively obtained data distribution, or,

  - usually, via a weighting of the training samples, each time using a different set of weights.

The final learner is obtained via a weighted average of all the hierarchically designed base learners.

It turns out that, given a sufficient number of iterations, one can significantly improve the (poor) performance of a weak learner.

The AdaBoost Algorithm

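Our goal is to design a binary classifier:

$$ f(x) = \operatorname{sgn}\{F(x)\}, \tag{7.73} $$

where

$$ F(x) := \sum_{k=1}^{K} a_k \phi(x; \theta_k), \tag{7.74} $$

where $\phi(x; \theta_k)$ is the base classifier at iteration $k$, defined in terms of a set of parameters, $\theta_k$, $k = 1, 2, \ldots, K$, to be estimated.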

- The base classifier is selected to be a binary one, i.e., $\phi(x; \theta) \in \{-1, 1\}$.

- The set of unknown parameters is obtained in a step-wise approach and in a greedy way; that is:

  - at each iteration step, $i$, we only optimize with respect to a single pair, $(a_i, \theta_i)$, by keeping the parameters, $a_k, \theta_k$, $k = 1, 2, \ldots, i-1$, obtained from the previous steps, fixed.

Note that, ideally, one should optimize with respect to all the unknown parameters simultaneously; however, this would lead to a very computationally demanding optimization task.

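Assume that we are currently at the $i$th iteration step; consider the partial sum of terms

$$ F_i(\cdot) = \sum_{k=1}^{i} a_k \phi(\cdot; \theta_k). \tag{7.75} $$

Then we can write the following recursion:

$$ F_i(\cdot) = F_{i-1}(\cdot) + a_i \phi(\cdot; \theta_i), \quad i = 1, 2, \ldots, K, \tag{7.76} $$

starting from an initial condition. According to the greedy rationale, $F_{i-1}(\cdot)$ is assumed known and the goal is to optimize with respect to the set of parameters, $a_i, \theta_i$.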
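The recursion $F_i = F_{i-1} + a_i \phi(\cdot; \theta_i)$ and the final rule $f(x) = \operatorname{sgn}\{F(x)\}$ can be sketched in a few lines. This is a minimal illustration, assuming the base-classifier outputs $\phi(x_n; \theta_k) \in \{-1, 1\}$ have already been computed and stored row by row, and taking $F_0 = 0$ as the initial condition; the function name `partial_sums` and the toy numbers are hypothetical.

```python
import numpy as np

def partial_sums(alphas, base_outputs):
    """Return F_1(x), ..., F_K(x) via the recursion F_i = F_{i-1} + a_i * phi_i(x).

    alphas:       shape (K,), the weights a_k
    base_outputs: shape (K, N); entry [k, n] is phi(x_n; theta_k) in {-1, +1}
    """
    F = np.zeros(base_outputs.shape[1])  # initial condition F_0(x) = 0 (an assumption)
    history = []
    for a_i, phi_i in zip(alphas, base_outputs):
        F = F + a_i * phi_i              # F_i = F_{i-1} + a_i * phi(x; theta_i)
        history.append(F.copy())
    return np.array(history)

alphas = np.array([0.9, 0.5, 0.3])
phi = np.array([[ 1, -1,  1, -1],
                [ 1,  1, -1, -1],
                [-1,  1,  1, -1]])
F = partial_sums(alphas, phi)
labels = np.sign(F[-1])                  # f(x) = sgn{F_K(x)}
print(labels)
```

The last partial sum equals the full weighted sum $\sum_k a_k \phi(x; \theta_k)$, so the greedy scheme only changes how the $a_k, \theta_k$ are found, not the form of the final classifier.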

For optimization, a loss function has to be adopted. No doubt, different options are available, giving different names to the derived algorithm. A popular loss function used for classification is the exponential loss, defined as

$$ \mathcal{L}\big(y, F(x)\big) = \exp\big(-yF(x)\big), \tag{7.77} $$

and it gives rise to the adaptive boosting (AdaBoost) algorithm. The exponential loss can be considered a (differentiable) upper bound of the (nondifferentiable) 0-1 loss function.

- Note that the exponential loss weights misclassified ($yF(x) < 0$) points more heavily compared to the correctly identified ones ($yF(x) > 0$).
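A quick numerical check of the two claims above, with both losses written as functions of $yF(x)$: the exponential loss never falls below the 0-1 loss, and it grows rapidly for misclassified points. This is a minimal sketch; the helper names are hypothetical, and the 0-1 loss counts $yF(x) = 0$ as an error, matching $\chi_{(-\infty, 0]}$.

```python
import numpy as np

def exp_loss(margin):
    """Exponential loss exp(-y F(x)), as a function of y F(x)."""
    return np.exp(-np.asarray(margin, dtype=float))

def zero_one_loss(margin):
    """0-1 loss: 1 when y F(x) <= 0 (misclassified or on the boundary), else 0."""
    return np.where(np.asarray(margin) <= 0, 1.0, 0.0)

margins = np.linspace(-3.0, 3.0, 13)                         # values of y F(x)
assert np.all(exp_loss(margins) >= zero_one_loss(margins))   # upper bound holds on the grid

for m in (-2.0, -0.5, 0.5, 2.0):
    print(f"yF(x) = {m:+.1f}: exponential loss = {float(exp_loss(m)):.3f}, "
          f"0-1 loss = {float(zero_one_loss(m)):.0f}")
```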

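Employing the exponential loss function, the set $a_i, \theta_i$ is obtained via the respective empirical cost function, in the following manner:

$$ (a_i, \theta_i) = \arg\min_{a, \theta} \sum_{n=1}^{N} \exp\Big(-y_n \big(F_{i-1}(x_n) + a \phi(x_n; \theta)\big)\Big). \tag{7.78} $$

This optimization is also performed in two steps. First, $a$ is treated as fixed and we optimize with respect to $\theta$,

$$ \theta_i = \arg\min_{\theta} \sum_{n=1}^{N} w_n^{(i)} \exp\big(-y_n a \phi(x_n; \theta)\big), \tag{7.79} $$

where

$$ w_n^{(i)} := \exp\big(-y_n F_{i-1}(x_n)\big), \quad n = 1, 2, \ldots, N. \tag{7.80} $$

Observe that $w_n^{(i)}$ depends neither on $a$ nor on $\phi(x_n; \theta)$; hence it can be considered a weight associated with sample $n$. Moreover, its value depends entirely on the results obtained from the previous recursions.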

The optimization in (7.79) depends on the specific form of the base classifier. However, due to the exponential form of the loss, and the fact that the base classifier is a binary one, so that $\phi(x; \theta) \in \{-1, 1\}$, optimizing (7.79) is readily seen to be equivalent to optimizing the following cost:

$$ \theta_i = \arg\min_{\theta} P_i, \tag{7.81} $$

where

$$ P_i := \sum_{n=1}^{N} w_n^{(i)} \chi_{(-\infty, 0]}\big(y_n \phi(x_n; \theta)\big), \tag{7.82} $$

and $\chi_{(-\infty, 0]}(\cdot)$ is the 0-1 loss function. Note that $P_i$ is the weighted empirical classification error. Obviously, when the misclassification error is minimized, the cost in (7.79) is also minimized, because the exponential loss weighs the misclassified points more heavily.
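The equivalence between minimizing (7.79) and minimizing the weighted error can be written out in one line. Write $P_i(\theta)$ for the weighted error of a candidate $\theta$, with the weights normalized to sum to one, and assume $a > 0$. Since $y_n \phi(x_n; \theta) = \pm 1$, the sum splits into correctly classified and misclassified samples:

$$
\sum_{n=1}^{N} w_n^{(i)} e^{-y_n a \phi(x_n; \theta)}
= e^{-a}\big(1 - P_i(\theta)\big) + e^{a} P_i(\theta)
= e^{-a} + \big(e^{a} - e^{-a}\big) P_i(\theta).
$$

The first term does not depend on $\theta$, and $e^{a} - e^{-a} > 0$ for $a > 0$, so the $\theta$ that minimizes the exponential cost is exactly the one with the smallest weighted error.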

- To guarantee that $P_i$ remains in the $[0, 1]$ interval, the weights are normalized to unity by dividing by their sum; note that this does not affect the optimization process.

In other words, $\theta_i$ can be computed in order to minimize the empirical misclassification error committed by the base classifier. For base classifiers of very simple structure, such minimization is computationally feasible.
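For the simplest base classifiers, the minimization of the weighted error over $\theta$ can be done by brute force. The sketch below assumes decision stumps (a threshold on a single feature) as the base classifier, a choice the text above does not make; the names `fit_stump` and `predict_stump` and the parameterization $\theta = (j, t, s)$ (feature index, threshold, polarity) are hypothetical.

```python
import numpy as np

def fit_stump(X, y, w):
    """Exhaustively search for the decision stump with the smallest weighted error.

    The stump is phi(x; theta) = s if x[j] > t else -s, with theta = (j, t, s).
    Returns (theta, P), where P is the weighted error with normalized weights.
    """
    w = w / w.sum()                          # normalize so that P lies in [0, 1]
    best_theta, best_err = None, np.inf
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # candidate thresholds: one below the minimum, then midpoints between sorted values
        thresholds = np.concatenate(([values[0] - 1.0], (values[:-1] + values[1:]) / 2))
        for t in thresholds:
            pred = np.where(X[:, j] > t, 1, -1)
            for s in (1, -1):
                err = w[s * pred != y].sum()  # total weight of the misclassified samples
                if err < best_err:
                    best_theta, best_err = (j, float(t), s), float(err)
    return best_theta, best_err

def predict_stump(theta, X):
    j, t, s = theta
    return s * np.where(X[:, j] > t, 1, -1)

X = np.array([[1.0], [2.0], [3.0], [4.0]])
y = np.array([-1, -1, 1, 1])
theta, P = fit_stump(X, y, np.ones(4))
print(theta, P)
```

The search costs on the order of (number of features) x (number of samples)^2 operations here, which is what makes stumps a practical choice of "very simple structure".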

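Having computed the optimal $\theta_i$, the following are easily established from the respective definitions,

$$ \sum_{n:\, y_n \phi(x_n; \theta_i) < 0} w_n^{(i)} = P_i, \tag{7.83} $$

and

$$ \sum_{n:\, y_n \phi(x_n; \theta_i) > 0} w_n^{(i)} = 1 - P_i. \tag{7.84} $$

Combining (7.83) and (7.84) with (7.78) and (7.80), and recalling that the weights are normalized, it follows that

$$ a_i = \arg\min_{a} \Big\{ \exp(-a)\,(1 - P_i) + \exp(a)\, P_i \Big\}. \tag{7.85} $$

Taking the derivative with respect to $a$ and equating to zero results in

$$ a_i = \frac{1}{2} \ln \frac{1 - P_i}{P_i}. \tag{7.86} $$

Once $a_i$ and $\theta_i$ have been estimated, the weights for the next iteration are readily given by

$$ w_n^{(i+1)} = \frac{w_n^{(i)} \exp\big(-y_n a_i \phi(x_n; \theta_i)\big)}{Z_i} \propto \exp\big(-y_n F_i(x_n)\big), \tag{7.87} $$

where $Z_i$ is the normalizing factor

$$ Z_i := \sum_{n=1}^{N} w_n^{(i)} \exp\big(-y_n a_i \phi(x_n; \theta_i)\big). \tag{7.88} $$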
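One reweighting step can be traced numerically. This minimal sketch assumes the weights are already normalized and that $0 < P_i < 1/2$; the function name `adaboost_step` and the toy labels are hypothetical. It also cross-checks the closed-form $a_i$ against a brute-force minimization of $\exp(-a)(1 - P_i) + \exp(a) P_i$.

```python
import numpy as np

def adaboost_step(w, y, phi):
    """One AdaBoost reweighting step.

    w:   current weights w_n^(i), already normalized to sum to one
    y:   labels in {-1, +1}
    phi: outputs phi(x_n; theta_i) in {-1, +1} of the base classifier just trained
    Assumes 0 < P_i < 1/2, so that a_i is finite and positive.
    """
    P = w[y * phi < 0].sum()                  # weighted empirical error P_i
    a = 0.5 * np.log((1 - P) / P)             # a_i = (1/2) ln((1 - P_i) / P_i)
    unnormalized = w * np.exp(-y * a * phi)   # misclassified samples are scaled up by exp(a_i)
    Z = unnormalized.sum()                    # normalizing factor Z_i
    return P, a, unnormalized / Z

y   = np.array([ 1,  1, -1, -1,  1])
phi = np.array([ 1, -1, -1, -1,  1])          # only the second sample is misclassified
w   = np.full(5, 0.2)
P, a, w_next = adaboost_step(w, y, phi)

# Cross-check a_i against a brute-force minimization of exp(-a)(1 - P) + exp(a) P.
grid = np.linspace(0.001, 3.0, 300000)
a_grid = grid[np.argmin(np.exp(-grid) * (1 - P) + np.exp(grid) * P)]
print(P, a, a_grid, w_next)
```

Here $P_i = 0.2$ gives $a_i = \ln 2$; the misclassified sample's weight rises from 0.2 to 0.5, while each correctly classified one drops to 0.125. In general, at the optimal $a_i$ the samples misclassified by $\theta_i$ end up carrying exactly half of the new total weight, so the classifier just added performs no better than chance under the new weights.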

Looking at the way the weights are formed, one can grasp one of the major secrets underlying the AdaBoost algorithm:

- The weight associated with a training sample, $x_n$, is increased (decreased) with respect to its value at the previous iteration, depending on whether the pattern has failed (succeeded) in being classified correctly.

- Moreover, the percentage of the decrease (increase) depends on the value of $a_i$, which controls the relative importance of the $i$th base classifier in the buildup of the final classifier.

- Hard samples, which keep failing over successive iterations, gain importance in their participation in the weighted empirical error value.

- For the case of AdaBoost, it can be shown that the training error tends to zero exponentially fast, provided that every base classifier does better than random guessing by a fixed amount, i.e., $P_i \le \frac{1}{2} - \gamma$ for some $\gamma > 0$.

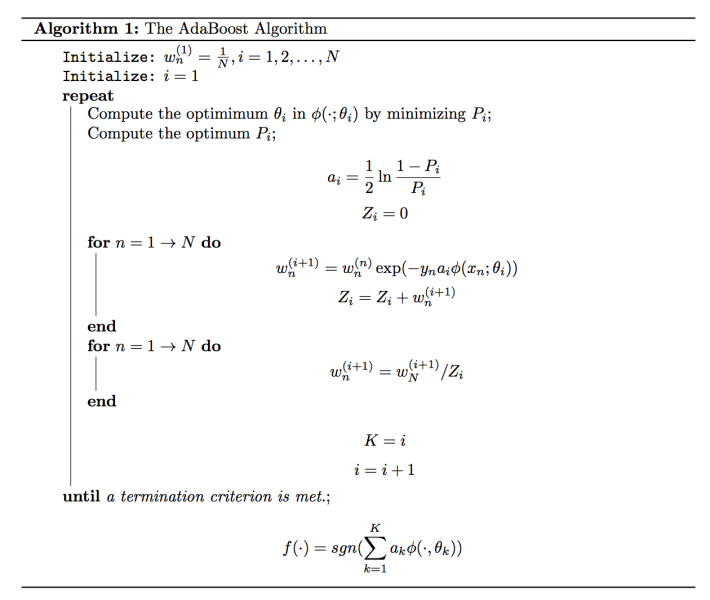

Algorithm 7.1 (The AdaBoost algorithm)
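Algorithm 7.1 can be sketched end to end as follows. This is a minimal sketch, not a reference implementation: it assumes decision stumps as base classifiers, starts from uniform weights, and clips $P_i$ away from 0 so that $a_i$ stays finite; all function names and the toy data are hypothetical.

```python
import numpy as np

def fit_stump(X, y, w):
    """Return the stump (j, t, s) with the smallest weighted error, and that error."""
    best, best_err = None, np.inf
    for j in range(X.shape[1]):
        v = np.unique(X[:, j])
        for t in np.concatenate(([v[0] - 1.0], (v[:-1] + v[1:]) / 2)):
            pred = np.where(X[:, j] > t, 1, -1)
            for s in (1, -1):
                err = w[s * pred != y].sum()
                if err < best_err:
                    best, best_err = (j, float(t), s), float(err)
    return best, best_err

def stump_predict(theta, X):
    j, t, s = theta
    return s * np.where(X[:, j] > t, 1, -1)

def adaboost(X, y, K):
    """AdaBoost with decision stumps; returns the list of (a_i, theta_i) and the Z_i's."""
    w = np.full(len(y), 1.0 / len(y))          # uniform initial weights
    ensemble, Zs = [], []
    for _ in range(K):
        theta, P = fit_stump(X, y, w)           # theta_i minimizes the weighted error P_i
        P = min(max(P, 1e-10), 1 - 1e-10)       # guard against P_i = 0 (infinite a_i)
        a = 0.5 * np.log((1 - P) / P)           # a_i = (1/2) ln((1 - P_i) / P_i)
        w = w * np.exp(-y * a * stump_predict(theta, X))
        Z = w.sum()                             # normalizing factor Z_i
        w = w / Z
        ensemble.append((a, theta))
        Zs.append(Z)
    return ensemble, Zs

def predict(ensemble, X):
    F = sum(a * stump_predict(theta, X) for a, theta in ensemble)  # F(x) = sum_k a_k phi(x; theta_k)
    return np.where(F >= 0, 1, -1)   # sgn{F(x)}; ties at 0 go to +1 (an arbitrary choice)

X = np.arange(8, dtype=float).reshape(-1, 1)
y = np.array([-1, -1, 1, 1, 1, 1, -1, -1])     # no single stump classifies all of these
ensemble, Zs = adaboost(X, y, K=3)
train_err = np.mean(predict(ensemble, X) != y)
print(train_err, np.prod(Zs))
```

Because the weights start uniform and are renormalized at each step, the product of the $Z_i$'s equals the empirical exponential loss of the final $F(x)$, which upper-bounds the training error; `np.prod(Zs)` should therefore never be smaller than `train_err`.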

The Log-Loss Function

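In AdaBoost, the exponential loss function was employed. From a theoretical point of view, this can be justified by the following argument:

- Consider the mean value with respect to the binary label, $y$, of the exponential loss function:

$$ \mathbb{E}\big[\exp(-yF(x)) \mid x\big] = P(y = 1 \mid x)\exp\big(-F(x)\big) + P(y = -1 \mid x)\exp\big(F(x)\big). \tag{7.89} $$

Taking the derivative with respect to $F(x)$ and equating to zero, we readily obtain that the minimum of (7.89) occurs at

$$ F_*(x) = \arg\min_{f} \mathbb{E}\big[\exp(-yf) \mid x\big] = \frac{1}{2} \ln \frac{P(y = 1 \mid x)}{P(y = -1 \mid x)}. \tag{7.90} $$

The logarithm of the ratio on the right-hand side is known as the log-odds ratio. Since $F_*(x) > 0$ exactly when $P(y = 1 \mid x) > P(y = -1 \mid x)$, the sign of $F_*(x)$ selects the more probable class. Hence, if one views the minimizing function in (7.78) as the empirical approximation of the mean value in (7.89), this fully justifies considering the sign of the function in (7.73) as the classification rule.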
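For completeness, the derivative step that leads to the minimizer of the mean exponential loss, assuming both posterior probabilities are strictly positive:

$$
\frac{\partial}{\partial F(x)}\Big[P(y = 1 \mid x)\, e^{-F(x)} + P(y = -1 \mid x)\, e^{F(x)}\Big]
= -P(y = 1 \mid x)\, e^{-F(x)} + P(y = -1 \mid x)\, e^{F(x)} = 0
\;\Longleftrightarrow\;
e^{2F(x)} = \frac{P(y = 1 \mid x)}{P(y = -1 \mid x)}.
$$

Taking logarithms and dividing by 2 gives half the log-odds ratio. The second derivative, $P(y = 1 \mid x)\, e^{-F(x)} + P(y = -1 \mid x)\, e^{F(x)}$, is positive, so this stationary point is indeed the minimum.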

A major problem associated with the exponential loss function, as is readily seen in Figure 7.14, is that it heavily weights wrongly classified samples, depending on the value of the respective margin, defined as

$$ m_x = yF(x). \tag{7.91} $$

Note that the farther the point is from the decision surface, $F(x) = 0$, the larger the value of $|F(x)|$. Thus, points that are located on the wrong side of the decision surface ($yF(x) < 0$) and far away from it are weighted with (exponentially) large values, and their influence in the optimization process is large compared to the other points. Thus, in the presence of outliers, the exponential loss is not the most appropriate one. As a matter of fact, in such environments, the performance of AdaBoost can degrade dramatically.

An alternative loss function is the log-loss or binomial deviance, defined as

$$ \mathcal{L}\big(y, F(x)\big) = \ln\Big(1 + \exp\big(-yF(x)\big)\Big), \tag{7.92} $$

which is also shown in Figure 7.14. Observe that its increase is almost linear for large negative values of the margin. Such a function leads to a more balanced influence of the loss among all the points.
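To make the outlier argument concrete, the sketch below tabulates both losses and the magnitude of their derivatives with respect to the margin $m_x = yF(x)$. Using the derivative magnitude as a proxy for a point's pull on the optimization is an interpretive assumption of this sketch: for the log-loss it never exceeds 1, whereas for the exponential loss it equals $\exp(-m_x)$ and explodes for badly misclassified points.

```python
import numpy as np

margins = np.array([-5.0, -2.0, 0.0, 2.0])        # values of m_x = y F(x)

exp_loss = np.exp(-margins)                       # exp(-m)
log_loss = np.log1p(np.exp(-margins))             # ln(1 + exp(-m))

# Magnitude of the derivative with respect to the margin.
exp_grad = np.exp(-margins)                       # |d/dm exp(-m)| = exp(-m)
log_grad = 1.0 / (1.0 + np.exp(margins))          # |d/dm ln(1 + exp(-m))| = 1 / (1 + exp(m))

for m, le, ll, ge, gl in zip(margins, exp_loss, log_loss, exp_grad, log_grad):
    print(f"m = {m:+.0f}: exp loss {le:8.3f} (grad {ge:8.3f}) | "
          f"log-loss {ll:6.3f} (grad {gl:.3f})")
```

At $m_x = -5$ the log-loss is close to $5$, illustrating the almost linear growth, while the exponential loss is already about $148$.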

Note that, with the log-loss defined as $\ln(1 + \exp(-yF(x)))$, the function that minimizes its mean with respect to $y$ is the log-odds ratio itself, that is, twice the function given in (7.90); it therefore has the same sign and leads to the same classification rule. However, if one employs the log-loss instead of the exponential, the optimization task gets more involved, and one has to resort to gradient descent or Newton-type schemes for optimization.
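The two population minimizers can be compared numerically for a single point $x$. This sketch assumes a posterior $P(y = 1 \mid x) = 0.8$ and writes the log-loss as $\ln(1 + \exp(-yF(x)))$; a fine grid search over $F(x)$ then recovers half the log-odds for the exponential loss and the full log-odds for the log-loss, both with the same sign.

```python
import numpy as np

p = 0.8                                    # assumed value of P(y = 1 | x)
F = np.linspace(-3.0, 3.0, 600001)         # grid of candidate values for F(x), step 1e-5

mean_exp = p * np.exp(-F) + (1 - p) * np.exp(F)                     # mean exponential loss
mean_log = p * np.log1p(np.exp(-F)) + (1 - p) * np.log1p(np.exp(F)) # mean log-loss

F_exp = F[np.argmin(mean_exp)]
F_log = F[np.argmin(mean_log)]
log_odds = np.log(p / (1 - p))

print(F_exp, 0.5 * log_odds)   # exponential loss: half the log-odds
print(F_log, log_odds)         # log-loss: the full log-odds
```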

Remark

Example

Implementation


The Boosting Trees

In the discussion on the experimental comparison of various methods before (Section 7.9), it was stated that boosted trees are among the most powerful learning schemes for classification and data mining. Thus, it is worth spending some more time on this special type of boosting technique.

Trees were introduced in Section 7.8. From the knowledge we have now acquired, it is not difficult to see that the output of a tree can be compactly written as

$$ T(x; \Theta) = \sum_{j=1}^{J} \hat{y}_j \chi_{R_j}(x), \tag{7.93} $$

where $J$ is the number of leaf nodes, $R_j$ is the region associated with the $j$th leaf, after the space partition imposed by the tree, $\hat{y}_j$ is the respective label associated with $R_j$ (output/prediction value for regression), and $\chi_{R_j}(\cdot)$ is our familiar characteristic function.

- The set of parameters, $\Theta$, consists of $(\hat{y}_j, R_j)$, $j = 1, 2, \ldots, J$, which are estimated during training.

- These can be obtained by selecting an appropriate cost function.

- Also, suboptimal techniques are usually employed in order to build up a tree.
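The sum-of-indicators form of a tree's output can be illustrated with a hypothetical one-dimensional tree. The split points and leaf values below are made up for illustration; a real tree would obtain them during training. Because the regions partition the input space, exactly one characteristic function is nonzero for each $x$.

```python
import numpy as np

# Hypothetical 1-D tree with J = 3 leaves, partitioning the real line into
# R_1 = (-inf, 0), R_2 = [0, 2), R_3 = [2, +inf), with one value y_hat_j per leaf.
edges = np.array([0.0, 2.0])          # split points that define the regions
y_hat = np.array([-1.0, 1.0, -1.0])   # label (or regression value) of each leaf

def tree_output(x):
    """T(x; Theta) = sum_j y_hat_j * chi_{R_j}(x); exactly one indicator equals 1."""
    leaf = np.searchsorted(edges, x, side="right")   # index j of the region containing x
    chi = np.eye(len(y_hat))[leaf]                   # chi_{R_j}(x) as one-hot rows
    return chi @ y_hat

print(tree_output(np.array([-1.0, 0.5, 3.0])))
```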

In a boosted tree model, the base classifier comprises a tree. In practice, one can employ trees of larger size. Of course, the size must not be very large, in order to be closer to a weak classifier. In practice, values of $J$ between three and eight are advisable.

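The boosted tree model can be written as

$$ F(x) = \sum_{k=1}^{K} T(x; \Theta_k), \tag{7.94} $$

where

$$ T(x; \Theta_k) = \sum_{j=1}^{J} \hat{y}_{kj} \chi_{R_{kj}}(x). $$

Equation (7.94) is basically the same as (7.74), with the $a_k$'s being equal to one. We have assumed the size of all the trees to be the same, although this may not necessarily be the case.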

Adopting a loss function, $\mathcal{L}$, and the greedy rationale used for the more general boosting approach, we arrive at the following recursive scheme of optimization:

$$ \Theta_i = \arg\min_{\Theta} \sum_{n=1}^{N} \mathcal{L}\big(y_n, F_{i-1}(x_n) + T(x_n; \Theta)\big). \tag{7.95} $$

Optimization with respect to $\Theta$ takes place in two steps:

- one with respect to $\hat{y}_{ij}$, $j = 1, 2, \ldots, J$, given $R_{ij}$, and then

- one with respect to the regions $R_{ij}$.

The latter is a difficult task and only simplifies in very special cases. In practice, a number of approximations can be employed.

- Note that in the case of the exponential loss and the two-class classification task, the above is directly linked to the AdaBoost scheme.

For more general cases, numeric optimization schemes are mobilized; see [22].

The same rationale applies to regression trees, where now loss functions for regression, such as the least-squares (LS) loss or the absolute error, are used.

- Such schemes are also known as multiple additive regression trees (MARTs) [44].
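The first of the two optimization steps is easy for common regression losses: with the regions fixed, each $\hat{y}_{ij}$ minimizes the loss over the samples of its own region only. For the LS loss this gives the mean of the current residuals $y_n - F_{i-1}(x_n)$ in the region, and for the absolute error their median. A minimal sketch, assuming the region membership of every sample is already known and every region contains at least one sample; the function name `leaf_values` is hypothetical.

```python
import numpy as np

def leaf_values(residuals, leaf_index, J, loss="ls"):
    """Optimal constants y_hat_j for fixed regions R_j.

    residuals:  r_n = y_n - F_{i-1}(x_n)
    leaf_index: index j of the region R_j that contains x_n
    LS loss -> mean of the residuals in each region; absolute error -> their median.
    """
    reduce = np.mean if loss == "ls" else np.median
    return np.array([reduce(residuals[leaf_index == j]) for j in range(J)])

y      = np.array([1.0, 2.0, 9.0, 4.0, 5.0, 6.0])
F_prev = np.zeros_like(y)                 # F_{i-1}(x_n); zero at the first iteration
leaf   = np.array([0, 0, 0, 1, 1, 1])     # assumed (fixed) region of each sample
r = y - F_prev
print(leaf_values(r, leaf, 2, "ls"))      # means:   [4. 5.]
print(leaf_values(r, leaf, 2, "abs"))     # medians: [2. 5.]
```

The large residual 9.0 pulls the LS value of the first leaf up to 4, whereas the median stays at 2, the same robustness argument made earlier for the classification losses.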

There are two critical factors concerning boosted trees:

- one is the size of the trees, $J$, and

- the other is the choice of the number of iterations, $K$.

- Concerning the size of the trees, usually one tries different sizes, $J$, and selects the best one.

- Concerning the number of iterations, for large values of $K$, the training error may get close to zero, but the test error can increase due to overfitting. Thus, one has to stop early enough, usually by monitoring the performance (e.g., on a validation set).

- Another way to cope with overfitting is to employ shrinkage methods, which tend to be equivalent to regularization.

- For example, in the stage-wise expansion of $F_i(x)$ used in the optimization step (7.95), one can instead adopt the following:

$$ F_i(\cdot) = F_{i-1}(\cdot) + \nu\, T(\cdot; \Theta_i). $$

The parameter $\nu$ takes small values, and it can be considered as controlling the learning rate of the boosting procedure.

- Values $\nu < 0.1$ are advised.

- However, the smaller the value of $\nu$, the larger the value of $K$ should be to guarantee good performance.
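The shrinkage update and early stopping can be sketched together for the regression case. This is a minimal sketch under several assumptions not made in the text: one-dimensional inputs, the LS loss, regression stumps ($J = 2$) as the trees, $F_0 = 0$, and a held-out validation set used to pick $K$; all names and the synthetic data are hypothetical. For each split, the leaf values are residual means, so each tree solves the greedy step exactly for this class of stumps.

```python
import numpy as np

def fit_regression_stump(x, r):
    """LS-optimal split of 1-D inputs into J = 2 regions; leaf values are residual means."""
    order = np.argsort(x)
    xs, rs = x[order], r[order]
    n = len(xs)
    k = np.arange(1, n)                        # candidate left-region sizes
    left_sum = np.cumsum(rs)[:-1]
    right_sum = rs.sum() - left_sum
    # SSE = sum(r^2) - left_sum^2 / k - right_sum^2 / (n - k): maximize the subtracted part
    gain = left_sum ** 2 / k + right_sum ** 2 / (n - k)
    gain[xs[1:] == xs[:-1]] = -np.inf          # no split between equal input values
    kb = int(np.argmax(gain)) + 1
    t = (xs[kb - 1] + xs[kb]) / 2
    return t, left_sum[kb - 1] / kb, right_sum[kb - 1] / (n - kb)

def stump_value(params, x):
    t, left_value, right_value = params
    return np.where(x <= t, left_value, right_value)

def ls_boost(x, y, x_val, y_val, K, nu):
    """LS boosting of stumps with shrinkage, F_i = F_{i-1} + nu * T(x; Theta_i), F_0 = 0."""
    F, F_val = np.zeros_like(y), np.zeros_like(y_val)
    train_mse, val_mse = [], []
    for _ in range(K):
        params = fit_regression_stump(x, y - F)      # tree fitted to the current residuals
        F = F + nu * stump_value(params, x)
        F_val = F_val + nu * stump_value(params, x_val)
        train_mse.append(np.mean((y - F) ** 2))
        val_mse.append(np.mean((y_val - F_val) ** 2))
    return np.array(train_mse), np.array(val_mse)

rng = np.random.default_rng(0)
x, x_val = rng.uniform(0, 6, 200), rng.uniform(0, 6, 200)
y = np.sin(x) + 0.3 * rng.standard_normal(200)
y_val = np.sin(x_val) + 0.3 * rng.standard_normal(200)

for nu in (1.0, 0.1):
    train_mse, val_mse = ls_boost(x, y, x_val, y_val, K=300, nu=nu)
    K_best = int(np.argmin(val_mse)) + 1      # early stopping: K with the lowest validation MSE
    print(f"nu={nu}: best K={K_best}, validation MSE={val_mse.min():.3f}, "
          f"training MSE after 300 steps={train_mse[-1]:.3f}")
```

With LS stumps the training error can never increase from one iteration to the next, for any $0 < \nu \le 1$, which is why the validation curve, not the training curve, has to decide when to stop. Printing the best $K$ for both values of $\nu$ is a quick way to observe the trade-off stated above on this toy problem; with the smaller $\nu$ one would expect the validation minimum to occur at a larger $K$.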
